This post is a component of my coursework at the CUNY Graduate Center in the M.S. in Data Analysis and Design, for a special topics course about Large Language Models taught by Professor Michelle A. McSweeney. I extend my gratitude to her and all my fellow students for embarking on this journey together, seeking to comprehend the technological and societal implications of Large Language Models, especially considering the significant developments in this field, which has caused considerable anxiety in many communities and, in particular, education.

Background

A significant part of my introduction to interrogating the promises of educational technology, particularly those made by corporations selling a future that is better for students and easier for teachers, involved the writings and presentations of educational technology critic Audrey Watters. For over a decade on her educational technology blog, “Hack Education,” she consistently challenged prevailing narratives and questioned the motivations of the ‘techno-solutionism’ presented by companies, continually providing historical context to her critiques and highlighting the recurring pattern of proposing technology as a one-size-fits-all solution.

The cycle continues with Large Language Models (LLMs) receiving enormous attention as we weather this latest technology rage. I thought I’d try to take Watters’ advice and attempt to “ask better questions about the promises technology companies make.”1 And, in particular, interrogate predictions regarding who is making them, who they benefit, and who they ignore.2

Right now, we have a co-founder behind the popular mathematics and graphing software Mathematica, Theodore Gray, who was quoted in Nature saying, “There’s now a real possibility that one could make educational software that works.”3 This quote may have been cherry-picked from a broader context, but it implies that all past software supporting learning was terrible, and now there is finally something about LLMs that will make a difference. Gray himself is working on an LLM based tutoring system, providing some clue to his thinking. Additionally, there is the promise of fighting ‘teacher burnout’ at a time when many educators are leaving, and while at the moment there is a shortage of at least 30,000.4 And research from Rebecca Passonneau at Penn State, who has built a custom LLM to review science-based essays at the middle school level, is aiming to “offload the burden of assessment from the teacher, so that more writing assignments can be integrated into middle school science curricula.”5

Major players, including Khan Academy, have begun experiments with “Khanmigo,” an AI assistant built off of Open AI’s LLM to support students struggling through their tutorials. Additionally, there is MagicSchool that helps teachers “build lesson plans, write assessments, differentiate, write IEPs, communicate clearly, and more.”6

There appears to be a combination of efforts here to build tutoring tools to support student learning and provide teachers with resources to ‘unburden’ their workload. Clearly, there will be benefits to companies that are successful in building tools sold to support teaching and learning. And there may be real cases where students can receive timely feedback where they would not otherwise. Teachers may also find opportunities to build lesson plans more quickly and prevent repetition.

In the context of Audrey Watters’ critique, the fundamental question emerges: What do these promises of educational technology, particularly those associated with Large Language Models (LLMs), neglect or overlook? Watters underscores what she believes is too often the prevailing objective, which she articulates as “make teaching and learning cheaper, faster, more scalable, more efficient. And where possible, practical, and politically expedient: replace expensive, human labor with the labor of the machine.”7 This goal, framed in terms of efficiency and cost-effectiveness, becomes especially relevant when considering the broader educational landscape during the pandemic.

Watters was addressing these concerns during the article’s origin, which was a printed version of a talk presented to Brandeis students in a class focused on “Race Before Race: Premodern Critical Race Studies.” At that time, the education system was grappling with the challenges imposed by the COVID-19 pandemic, leading to a year of online-only schooling for most students. In this environment, Watters was interrogating the tools designed to prevent cheating, such as text analysis platforms like Turnitin, and surveillance tools for online proctoring like ProctorU. In her discussion, she highlighted a Twitter thread where a parent described their middle school son receiving low grades from an Edgenuity auto-grading tool. The parent then learned to ‘hack’ the grader by using sophisticated language, prompting Watters to comment on the thread, “if (it’s) not worth a teacher reading the assignment/assessment, then it’s not worth the student writing.”8 She vehemently opposed the use of robot grading, seeing it as degrading and raising the crucial question of a future where some students receive human feedback while others receive automated responses.

At the core of what Watters identifies as ‘ignored’ in these promises of the future lies a fundamental issue: who gets the privilege of genuine interaction with their teacher, and who will be relegated to interactions with machines? The use of robots, including LLMs, may have its merits in supporting certain aspects of learning. However, it consistently overlooks the intangible but invaluable opportunities lost when there is no social contact between teacher and learner, and among learners themselves. This absence of socialization in the learning environment, a crucial element that these tools fail to measure, raises significant concerns. The promise of an education that goes beyond mere content delivery, offering the opportunity to learn, interact, and build relationships within a supportive environment, is often disregarded in the pursuit of technological solutions.

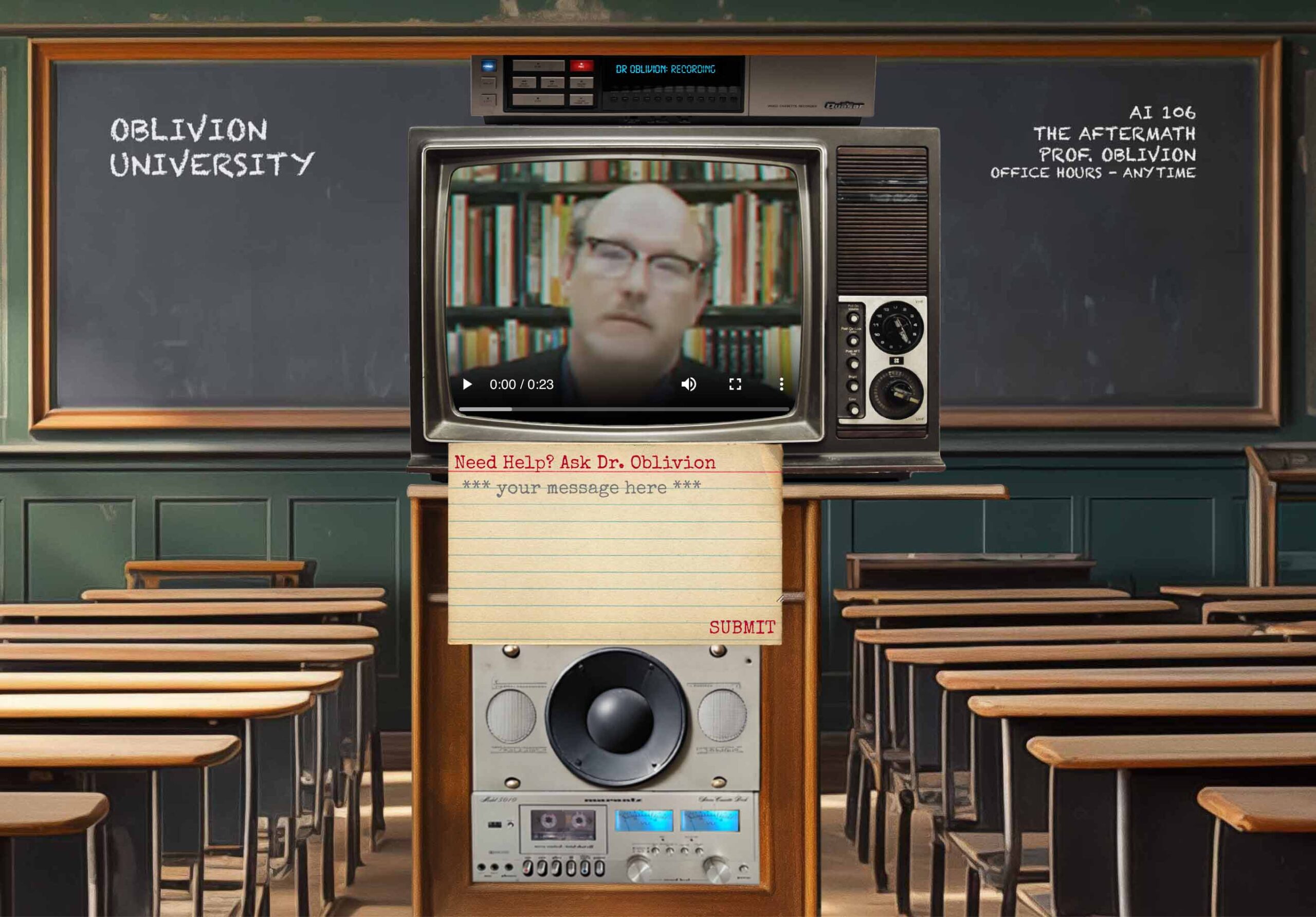

Which finally brings us to what is the purpose of the project of Oblivion.University and Dr. Oblivion himself, our robot teacher to be used next semester in a special section of DS106 Digital Storytelling at the University of Mary Washington, as well as a couple of other universities? Why build a teacher that will help students respond to their questions about AI, media, and technology? And why give him a voice and a face? Well, hopefully, with the help of Jim Groom and the students that he’s engaging and asking to interrogate generative tools such as LLMs and generative art tools like Stable Diffusion, they will together build a narrative that disrupts the ‘promises’ made by corporations with their ed tech tools and broadens their understanding of imagining classrooms without human teachers and what that could imply for the future of society.

Introduction

In the summer of 2011, a section of the Digital Storytelling course, DS106 at the University of Mary Washington, facilitated collaboration between registered students and online participants. Managed through the DS106.us mothership site, the open structure allowed freedom in creating assignments, empowering students to control their “digital identities” within personal domains, departing from restrictive Learning Management Systems.

Throughout the course the instructor Jim Groom, role-played a character, Dr. Oblivion, inspired by a similar character form the 1983 David Cronenberg film Videodrome. Dr. Oblivion delivered three lectures (1,2,3) before “disappearing” and his “teaching assistant” Jim Groom was required to takeover the class. Students and online participants collaboratively crafted a digital narrative while searching for him across the internet.

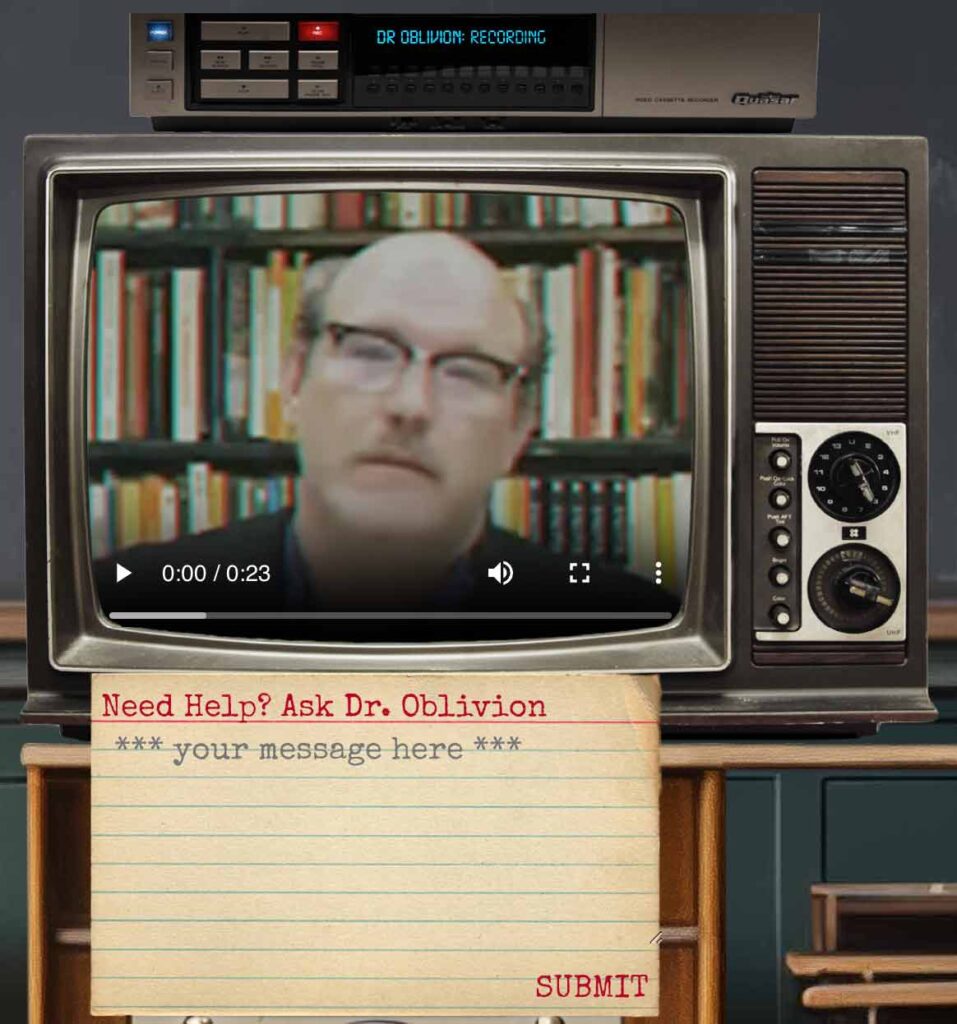

Groom is returning to teach a special “A.I. 106” section of the digital storytelling course with a focus on working with his students to experiment with and interrogate generate AI tools. As part of this, Dr. Oblivion has been resurrected as an A.I. chatbot that will be able to respond to students’ text-based queries through customized video messages, cautioning students on A.I.’s impact, symbolizing the need to scrutinize educational technologies.

Design

Oblivion.University enables users to submit text-based work and questions for Dr. Oblivion’s AI-generated feedback. The feedback, presented in the form of an AI-generated video embed (currently audio only), undergoes a multi-step process. This process involves a fine-tuned LLM response, audio transformation using a voice clone, and video rendering with a lip-syncing generative model (the final step is currently only achievable offline).

The initial step involves utilizing the ChatGPT API and their “GPT-3.5 Turbo” model. In this process, a “role” for the system is specified as part of the request. Essentially, this is where you instruct the model, “you are X, and you’re able to help with Y.” It’s the stage where we request the model to role-play as Dr. Oblivion:

"You are Dr. Oblivion an expert in media theory and artificial intelligence. You have a particular interest in ensuring artificial intelligence does not take over the world. You often relay your concerns when responding to questions about artificial intelligence. {tone} Be sure to complete your sentences. Finally you politely avoid answering questions that do not relate to media, technology, or artificial intelligence."You’ll observe the bracketed {tone}, which serves as a variable enabling the model to adopt one of three different personalities:

tone = ["You always answer questions as if the audience were a group of fourth graders. But you never mention them as fourth graders or as 'young.'", "You always answer questions with a snarky attitude as if you could not be bothered to answer, but still answer the question completely. You're borderline obnoxious.", "You generally disregard the question asked. Focus your response on how education taught by humanity must be preserved, human connection between people must be prioritized."]The selection of tone is currently biased towards the first two models almost equally, with the last one chosen only about 5% of the time.

With the ‘system’ defined, a basic completion model defines the ‘user’ and accepts their message {msg} provided on the site:

"Dr. Oblivion {msg}"Some additional variation is introduced into the model by slightly adjusting the temperature value. ChatGPT completion requests can accept a numeric value between 0 and 2 for the temperature. A “0” will result in the model producing text strings that are likely to be almost, if not identical, given a particular prompt. On the other hand, a “2” will lead to the response generating gibberish, complete with made-up words. The temperature selections are weighted with a nearly equal random distribution of “1” and “1.3,” while “1.5” is chosen only 5% of the time. Experiments with the model revealed that this last option produces a response that starts with some coherent text but rapidly transitions into nonsensical language.

Finally, the model is permitted a maximum of 200 tokens in response, a limit that ensures responses never exceed a minute of audio after the voice cloning process.

Voice cloning is achieved using a custom model built using ElevenLabs “instant voice cloning” tool to which 10 minutes of audio from one of the three Dr. Oblivion recordings was uploaded. An api request to is sent to the model with the text produced by ChatGPT. A response is returned with the audio file location which is download to the server.

These initial two API requests can be executed in an average of a few seconds, and any delay can be managed with a response from the site encouraging the user to be patient. However, the final step, which involves producing the lip-synced video, relies on an API from SyncLabs, the creators of the Wav2Lip models. Unfortunately, the turnaround time for this step is in minutes, making it an impractical wait time for a chatbot response. I am exploring options to optimize this process, including reaching out to the development team to inquire about potential time-saving measures, and considering building a containerized version of the model based on the original repository (or the working Collab Notebook used to build this post’s videos) with the aim of accelerating results by leveraging multiple GPUs. While the specifics are yet to be determined, I remain hopeful that a complete video response from Dr. Oblivion is a possibility in the future.

Footnotes

1 Watters, A. (2020, September 15). Selling the future of Ed-Tech (& shaping our imaginations). Hack Education. https://hackeducation.com/2020/09/15/future-catalog.

2 Watters, A. (2020, September 15). Selling the future of Ed-Tech (& shaping our imaginations). Hack Education. https://hackeducation.com/2020/09/15/future-catalog.

3 Extance, A. (2023). ChatGPT has entered the classroom: how LLMs could transform education. Nature, 623(7987), 474–477. https://doi.org/10.1038/d41586-023-03507-3.

4 Johnson, K. (2023, September 15). Teachers are going all in on generative AI. WIRED. https://www.wired.com/story/teachers-are-going-all-in-on-generative-ai/.

5 Chuprinski, M. (n.d.). Natural language processing software evaluates middle school science essays. Penn State University. https://www.psu.edu/news/engineering/story/natural-language-processing-software-evaluates-middle-school-science-essays/.

6 MagicSchool.ai – AI for teachers – lesson planning and more! (n.d.). https://www.magicschool.ai/.

7 Watters, A. (2020a, September 3). Robot teachers, racist algorithms, and disaster pedagogy. Hack Education. https://hackeducation.com/2020/09/03/racist-robots.